In the modern era of data-driven decision-making, the abundance of data generated every day has necessitated the development of robust tools for processing, analyzing and deriving insights from these massive datasets. Java developers, with their proficiency in one of the most widely used programming languages, have a wide array of tools at their disposal to tackle the challenges of Big Data. Here, we delve into four of the top Big Data tools specifically tailored for Java developers: Apache Hadoop, Apache Spark, DeepLearning4j and JSAT.

- Apache Hadoop: Best for distributed storage and processing large datasets

- Apache Spark: Best for real-time data analytics and machine learning

- DeepLearning4j: Best for Java developers looking to incorporate deep learning and neural networks

- JSAT (Java Statistical Analysis Tool): Best for Java developers seeking a highly versatile machine learning tool

Apache Hadoop: Best for distributed storage and processing large datasets

One of the main players in the Big Data revolution is Apache Hadoop, a groundbreaking framework designed for distributed storage and processing of large datasets. Java developers have embraced Hadoop for its scalability and fault-tolerant architecture.

Pricing

Apache Hadoop is open-source and free to use for commercial and noncommercial projects under the Apache License 2.0.

Features

Apache Hadoop has the following key features:

- Hadoop Distributed File System.

- MapReduce.

- Data locality.

HDFS, the cornerstone of Hadoop, divides data into blocks and distributes them across a cluster of machines. This approach ensures high availability and fault tolerance by replicating data blocks across multiple nodes. Java developers can interact with HDFS programmatically, storing and retrieving data in a distributed environment.
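To make the block-and-replica idea concrete, here is a minimal plain-Java sketch. It does not use the Hadoop API: the class and method names (`BlockPlacementSketch`, `place`) are hypothetical, and the round-robin placement is a simplifying assumption, since real HDFS uses a rack-aware placement policy. The 128 MB block size and replication factor of 3 in the example mirror common HDFS defaults.

```java
import java.util.ArrayList;
import java.util.List;

public class BlockPlacementSketch {

    /** One block of a file plus the nodes that hold a replica of it. */
    public static final class Block {
        public final int index;
        public final long sizeBytes;
        public final List<String> replicaNodes;

        Block(int index, long sizeBytes, List<String> replicaNodes) {
            this.index = index;
            this.sizeBytes = sizeBytes;
            this.replicaNodes = replicaNodes;
        }

        @Override
        public String toString() {
            return "block " + index + " (" + sizeBytes + " bytes) on " + replicaNodes;
        }
    }

    /** Splits a file into fixed-size blocks and assigns each block to `replication` nodes. */
    public static List<Block> place(long fileSizeBytes, long blockSizeBytes,
                                    List<String> nodes, int replication) {
        if (replication > nodes.size()) {
            throw new IllegalArgumentException("replication exceeds node count");
        }
        // Ceiling division: the last block may be smaller than the others.
        int blockCount = (int) ((fileSizeBytes + blockSizeBytes - 1) / blockSizeBytes);
        List<Block> blocks = new ArrayList<>();
        for (int i = 0; i < blockCount; i++) {
            long remaining = fileSizeBytes - (long) i * blockSizeBytes;
            long size = Math.min(blockSizeBytes, remaining);
            // Assumption: simple round-robin placement, so replicas land on distinct nodes.
            List<String> replicas = new ArrayList<>();
            for (int r = 0; r < replication; r++) {
                replicas.add(nodes.get((i + r) % nodes.size()));
            }
            blocks.add(new Block(i, size, replicas));
        }
        return blocks;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        List<String> nodes = List.of("node-a", "node-b", "node-c", "node-d");
        // A 300 MB file with 128 MB blocks yields three blocks: 128, 128 and 44 MB.
        place(300 * mb, 128 * mb, nodes, 3).forEach(System.out::println);
    }
}
```

Because every block lives on three different nodes in this sketch, losing any single node still leaves two copies of each block, which is the property the replication scheme is meant to provide.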

Hadoop’s MapReduce programming model facilitates parallel processing. Developers specify a map function to process input data and produce intermediate key-value pairs. These pairs are then shuffled, sorted and fed into a reduce function to generate the final output. Java developers can harness MapReduce’s power for batch processing tasks like log analysis, data transformation and more.
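The map, shuffle-and-sort and reduce phases described above can be sketched as a word count in plain Java. This is an illustration of the programming model only, running in a single process. It does not use Hadoop's `Mapper` or `Reducer` classes, and the names `MapReduceSketch`, `map`, `shuffle`, `reduce` and `wordCount` are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class MapReduceSketch {

    /** Map phase: emit an intermediate (word, 1) pair for every word in a line. */
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String word : line.toLowerCase().split("\\W+")) {
            if (!word.isEmpty()) {
                out.add(Map.entry(word, 1));
            }
        }
        return out;
    }

    /** Shuffle and sort: group values by key; the TreeMap keeps keys in sorted order. */
    static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return grouped;
    }

    /** Reduce phase: collapse all values for one key into a single result. */
    static int reduce(String key, List<Integer> values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum;
    }

    /** Runs the three phases in sequence over the input lines. */
    public static Map<String, Integer> wordCount(List<String> lines) {
        List<Map.Entry<String, Integer>> intermediate = new ArrayList<>();
        for (String line : lines) {
            intermediate.addAll(map(line));
        }
        Map<String, Integer> result = new TreeMap<>();
        shuffle(intermediate).forEach((key, values) -> result.put(key, reduce(key, values)));
        return result;
    }

    public static void main(String[] args) {
        List<String> logLines = List.of("error disk full", "warn disk slow", "error network down");
        // prints {disk=2, down=1, error=2, full=1, network=1, slow=1, warn=1}
        System.out.println(wordCount(logLines));
    }
}
```

In a real cluster, many map tasks and reduce tasks run in parallel on different machines, and the shuffle step moves the intermediate pairs across the network so that all values for a given key reach the same reducer.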

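Hadoop relies on the concept of data locality to process data efficiently: Instead of moving large datasets across the network, it schedules computation on the nodes where the data blocks are already stored.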

Pros

Apache Hadoop has the following pros:

- Fast data processing: Because HDFS spreads data across the cluster and processing runs in parallel, Hadoop can often process large datasets faster than more traditional database management systems.

- Data formats: Hadoop offers support for multiple data formats, including CSV, JSON and Avro, to name a few.

- Machine learning: Hadoop integrates with machine learning libraries and tools such as Mahout, making it possible to incorporate ML processes in your applications.

- Integration with developer tools: Hadoop integrates with popular developer tools and frameworks within the Apache ecosystem, including Apache Spark, Apache Flink and Apache Storm.

Cons

While Hadoop is an integral tool for Big Data projects, it’s important to recognize its limitations. These include:

- The batch nature of MapReduce can hinder real-time data processing. This drawback has paved the way for Apache Spark.

- Hadoop’s security model relies on Kerberos authentication, which can be difficult to set up for users who lack security experience, and encryption at the network and storage levels is not enabled by default.

- Some developers complain that Hadoop is neither user-friendly nor code-efficient as programmers have to manually code each operation in MapReduce.

Apache Spark: Best for real-time data analytics and machine learning

Apache Spark has emerged as a versatile and high-performance Big Data processing framework, providing Java developers with tools for real-time data analytics, machine learning and more.

Pricing

Apache Spark is an open-source tool and has no licensing costs, making it free to use for programmers. Developers may use the tool for commercial projects, so long as they abide by the Apache Software Foundation’s software license and, in particular, its trademark policy.

Features

Apache Spark has the following features for Java developers:

- In-memory processing.

- Extensive libraries.

- Unified platform.

- Spark Streaming.

- Extensibility through DeepLearning4j.

Unlike Hadoop MapReduce, which writes intermediate results to disk between stages, Spark keeps working data in memory where possible, drastically accelerating processing speeds. This feature, coupled with Spark’s Resilient Distributed Dataset (RDD) abstraction, enables iterative processing and interactive querying with remarkable efficiency.
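A small plain-Java sketch can show why keeping data in memory matters for iterative jobs. It is not Spark code: `CachingSketch`, `Dataset`, `read` and `iterate` are hypothetical names, and the `cache()` method here only loosely mirrors the idea behind the `cache()` call on a Spark RDD. The sketch counts how often the underlying source is read during a ten-pass computation, with and without caching.

```java
import java.util.List;
import java.util.function.Supplier;

public class CachingSketch {

    /** A dataset that is reloaded from its source on every read unless caching is enabled. */
    public static final class Dataset {
        private final Supplier<List<Double>> source;
        private List<Double> cached;   // stays null until a cached read has loaded the data
        private boolean cacheEnabled;
        private int loads;             // number of times the source was actually read

        public Dataset(Supplier<List<Double>> source) {
            this.source = source;
        }

        /** Marks the dataset so the first read keeps its result in memory. */
        public Dataset cache() {
            cacheEnabled = true;
            return this;
        }

        public List<Double> read() {
            if (cacheEnabled && cached != null) {
                return cached;         // served from memory, no reload
            }
            loads++;
            List<Double> data = source.get();
            if (cacheEnabled) {
                cached = data;
            }
            return data;
        }

        public int loads() {
            return loads;
        }
    }

    /** An iterative job: each pass reads the whole dataset and nudges an estimate toward its mean. */
    public static double iterate(Dataset dataset, int passes) {
        double estimate = 0.0;
        for (int i = 0; i < passes; i++) {
            List<Double> data = dataset.read();
            double gradient = 0.0;
            for (double x : data) {
                gradient += estimate - x;
            }
            estimate -= 0.5 * gradient / data.size();
        }
        return estimate;
    }

    public static void main(String[] args) {
        Supplier<List<Double>> slowSource = () -> List.of(1.0, 2.0, 3.0, 4.0);

        Dataset uncached = new Dataset(slowSource);
        iterate(uncached, 10);
        System.out.println("source reads without cache: " + uncached.loads()); // 10

        Dataset cached = new Dataset(slowSource).cache();
        iterate(cached, 10);
        System.out.println("source reads with cache: " + cached.loads());      // 1
    }
}
```

When each read means going back to disk or the network, cutting ten reads down to one is where most of the speedup for iterative algorithms comes from.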

Spark’s ecosystem boasts libraries for diverse purposes, such as MLlib for machine learning, GraphX for graph processing and Spark Streaming for real-time data ingestion and processing. This versatility empowers Java developers to create end-to-end data pipelines.

Spark unifies various data processing tasks that typically require separate tools, simplifying architecture and development. This all-in-one approach enhances productivity for Java developers, who can use Spark for extract, transform and load (ETL) jobs, machine learning and data streaming.

Furthermore, Spark’s compatibility with Hadoop’s HDFS and its ability to process streaming data through tools like Spark Streaming and Structured Streaming make it an indispensable tool for Java developers handling a variety of data scenarios.

While Spark excels in various data processing tasks, its specialization in machine learning is augmented by DeepLearning4j.

Pros

Apache Spark has several pros worth mentioning, including:

- Speed and responsiveness: A key factor in handling large datasets is speed and processing ability. Apache Spark is often cited as running up to 100 times faster than Hadoop MapReduce for workloads that fit in memory.

- API: Apache Spark has an easy-to-use API for iterating over big datasets, featuring more than 80 operators for handling and processing data.

- Data analytics: Apache Spark offers support for a number of data analytics approaches, including map and reduce operations, machine learning, graph algorithms, SQL queries and more.

- Language support: The Big Data tool offers support not only for Java but also for other major languages, including Scala, Python and SQL.

Cons

Despite its many advantages, Apache Spark does have some notable cons, including:

- Lack of automation: Apache Spark requires manual coding, unlike other platforms that feature automation. This can reduce coding efficiency.

- Lack of support for record-based window criteria.

- Lacking in collaboration features: Apache Spark does not offer support for multi-user coding.

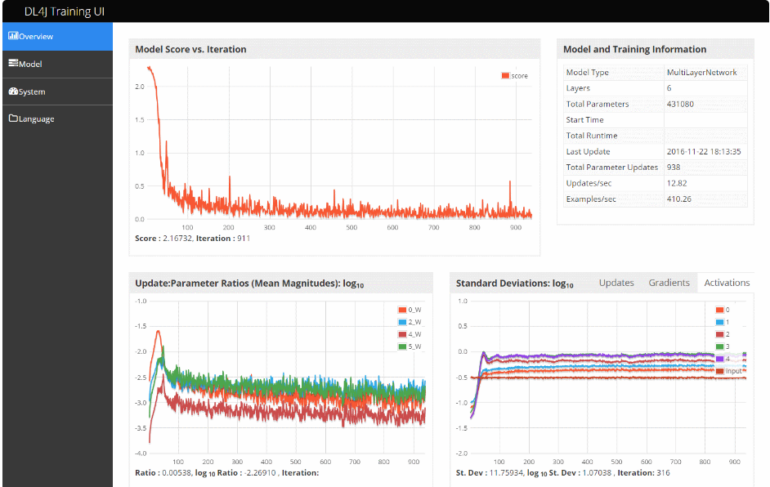

DeepLearning4j: Best for Java developers looking to incorporate deep learning and neural networks

As the realms of Big Data and artificial intelligence converge, Java developers seeking to harness the power of deep learning can turn to DeepLearning4j. This open-source deep learning library is tailored for Java and the Java Virtual Machine, enabling developers to construct and deploy complex neural network models.

Pricing

DeepLearning4j is another open-source offering and free to use for non-commercial and commercial purposes alike.

Features

- Support for diverse architectures.

- Scalable training.

- Developer tool integrations.

- User-friendly APIs.

DeepLearning4j supports various neural network architectures, including convolutional neural networks for image analysis and recurrent neural networks for sequential data. Java developers can harness these architectures for tasks ranging from image recognition to natural language processing.

With the integration of distributed computing frameworks like Spark, DeepLearning4j can scale training processes across clusters. This scalability is crucial for training deep learning models on extensive datasets.

DeepLearning4j offers seamless integration with popular developer tools like Apache Spark, making it possible to incorporate deep learning models into larger data processing workflows.

Java developers with varying levels of experience in deep learning can access DeepLearning4j’s user-friendly APIs to construct and deploy neural network models.

For Java developers who want a more general-purpose machine learning toolkit with a strong focus on optimization, JSAT is a valuable choice.

Pros

DeepLearning4j has a number of pros as a Big Data tool, which include:

- Community: DeepLearning4j has a large and thriving community that can offer support, troubleshooting, learning resources and plenty of documentation.

- Incorporates ETL within its library: This makes it easier to extract, transform and load data sets.

- Specializes in Java and JVM: This makes it simple to add deep learning features to existing Java applications.

- Support for distributed computing: Developers can use DeepLearning4j to train models, such as predictive maintenance models, concurrently across multiple machines, spreading the load and resource consumption.

Cons

DeepLearning4j is not without its cons, which include:

- Known for a few bugs, especially for larger-scale projects.

- Lack of support for languages like Python and R.

- Not as widely used as other deep learning libraries, such as TensorFlow and PyTorch.

JSAT (Java Statistical Analysis Tool): Best for Java developers seeking a highly versatile machine learning tool

JSAT is a robust machine learning library tailored for Java developers. It empowers Java developers to explore and experiment with machine learning algorithms, offering a versatile toolkit for various tasks.

Pricing

Like other Big Data tools for Java on our list, JSAT is open-source and free to use.

Features

The Java Statistical Analysis Tool has a few features developers should be aware of, including:

- Algorithm diversity.

- Optimization focus.

- Flexibility.

- Integrations.

JSAT provides a wide array of machine learning algorithms, including classification, regression, clustering and recommendation. Java developers can experiment with different algorithms to identify the best fit for their use case.

JSAT is designed with optimization in mind. Java developers can fine-tune algorithm parameters efficiently, enabling the creation of highly performant models.

With JSAT, Java developers can construct custom machine learning solutions tailored to their specific requirements. This flexibility is vital when working with diverse datasets and complex modeling needs.

Java developers can seamlessly integrate JSAT with other Big Data tools like Spark and Hadoop, creating comprehensive data pipelines that encompass multiple stages of processing and analysis.

Pros

JSAT’s pros include:

- Lightweight: JSAT is a small, lightweight Java library that is easy to use and understand, making it suitable for programmers of all levels.

- Written in Java: JSAT is an ideal choice for developers who code mostly in Java or Kotlin.

- Numerous algorithms for data processing: Despite its size and simplicity, JSAT features a large number of algorithms for processing data and performing statistical data analysis.

- Supports parallel execution and multithreading: JSAT is a speedy option for programs that are built for concurrency.

Cons

JSAT does have a few cons developers should consider. These include:

- Less community support and learning resources than some of its peers.

- May not support newer versions of Java, but it is still a worthwhile option for legacy systems.

Final thoughts on Big Data tools for Java developers

The Big Data landscape offers Java developers a myriad of tools to tackle the challenges of processing and deriving insights from vast datasets. Apache Hadoop and Apache Spark provide scalable, distributed processing capabilities, with Spark excelling in real-time analytics. DeepLearning4j caters to developers interested in deep learning and neural networks, while JSAT empowers Java developers with a versatile machine learning toolkit.

With these tools at their disposal, Java developers are well-equipped to navigate the complexities of Big Data and contribute to the advancement of data-driven solutions across industries.